by Tobias Baumann. First published in 2018. Updated in 2022.

In a worst-case scenario, the future might contain astronomical amounts of suffering (so-called risks of astronomical future suffering or s-risks for short). I’ve argued before that the reduction of s-risks is a plausible moral priority from the perspective of many value systems, particularly for suffering-focused views. But how could s-risks come about?

This is a challenging question for many reasons. In general, it is hard to imagine what developments in the distant future might look like. Scholars in the Middle Ages could hardly have anticipated the atomic bomb. Given this great uncertainty, the following examples of s-risks should be understood mostly as informed guesses for illustrative purposes, not as a claim that these scenarios constitute the most likely s-risks. Even taken together, these examples might account for only a small fraction of s-risks relative to those we cannot yet anticipate.

Going into detail on any specific scenario also carries a risk of availability bias. The availability bias is the tendency to view an example that comes readily to mind as more representative than it really is. This mental shortcut can be problematic if, as seems plausible, s-risks are actually distributed over a broad range of possible sources and scenarios. To counteract the availability bias, I will briefly outline many possible scenarios, rather than going into detail on any specific one.

Yet another challenge is the lack of clear feedback loops, especially if the scenarios in question have no comparable precedent in history. A conventional evidence-based approach is therefore not straightforwardly applicable.

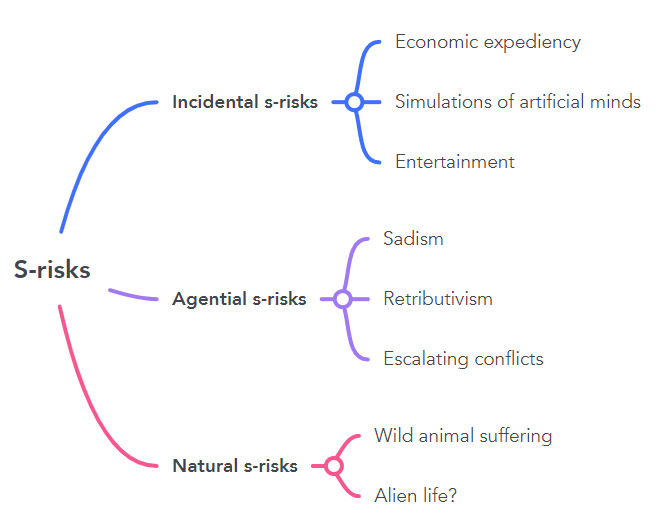

Given these challenges, it is most fruitful to explore many different types of s-risks. I will group the spectrum of possible scenarios into three categories: incidental s-risks, agential s-risks, and natural s-risks.

Incidental s-risks

Incidental s-risks arise when efficient ways to achieve a certain goal create a lot of suffering in the process, without anyone actively trying to cause suffering per se. The agent or agents that cause the s-risk are either indifferent to that suffering, or they would prefer a suffering-free alternative in theory but are not willing to bear the necessary costs in practice.

We can further divide incidental s-risks into subcategories based on the underlying motivation. In one class of scenarios, economic incentives and market forces cause large amounts of suffering because that suffering is a byproduct of high economic productivity. Animal suffering in factory farms is a case in point. It just so happens that the most economically efficient way to satisfy the demand for cheap animal products entails miserable conditions for farmed animals.[1] Future technology might enable similar dynamics, but on a much larger scale. For instance, the fact that evolution uses pain suggests that learning might be more efficient if negative reward signals are also used, and we might consider sufficiently advanced and complex reinforcement learners to be capable of suffering. If so, such systems could suffer on a large scale simply as a byproduct of their economic usefulness.

Another possibility involves suffering that is instrumental for information gain. Experiments on sentient creatures can be useful for scientific purposes, while causing serious harm to those experimented on. Again, future technology may enable such practices on a much larger scale. As discussed in the previous chapter, it may become possible to run a large number of simulations of artificial minds that are capable of suffering. If there are instrumental reasons to run many such simulations, this could lead to vast amounts of suffering. For example, an advanced AI system might run many simulations to improve its knowledge of human psychology or to predict what other agents will do in a certain situation.

It is also conceivable that complex simulations will be used for entertainment in the future, which could cause serious suffering if these simulations contain artificially sentient beings. Many people enjoy violent forms of entertainment, as countless historical examples show, from gladiator fights to public executions. The violent content of many of today's video games and movies is another case in point. Such forms of entertainment are victimless as long as they are fictional, but in combination with sentient artificial minds, they could become an s-risk.

Agential s-risks

The previous examples are situations in which an efficient solution to a problem happens to involve a lot of suffering as an unintentional byproduct. A different class of s-risks, which I call agential s-risks, arises when an agent actively and intentionally wants to cause harm.

A simple example is sadism. A minority of future agents might, like some humans, derive pleasure from inflicting pain on others. This will hopefully be quite rare, and such tendencies might be kept in check through social pressures or legal protections. Still, it is conceivable that new technological capabilities will multiply the potential harm caused by sadistic acts.

Agential s-risks might also arise when people harbor strong feelings of hatred towards others. One relevant factor is the human tendency to form tribal identities and divide the world into an ingroup and an outgroup. In extreme cases, such tribalism spirals into a desire to harm the other side as much as possible. History features many well-known examples of atrocities committed against those who belong to the "wrong" religion, ethnic group, or political ideology. Similar dynamics could unfold on an astronomical scale in the future.

Another theme is retributivism: seeking vengeance for actual or perceived wrongdoing by others. A concrete example is excessive criminal punishment; many historical and contemporary penal systems have inflicted extraordinarily cruel penalties.

These different themes can overlap or take place as part of an escalating conflict. For instance, large-scale warfare or terrorism involving advanced technology might amount to an s-risk. This is both because of the potential suffering of the combatants themselves and because extreme conflict tends to reinforce negative dynamics such as sadism, tribalism, and retributivism. War often brings out the worst in people. It is also conceivable that agents would, as part of an escalating conflict or war, make threats to deliberately bring about worst-case outcomes in an attempt to force the other side to yield.

A key factor that exacerbates agential s-risks is the presence of malevolent personality traits (like narcissism, psychopathy, or sadism) in powerful individuals. A case in point is the great harm caused by totalitarian dictators like Hitler or Stalin in the 20th century. (For a more detailed discussion of the concept of malevolence, its scientific basis, and possible steps to prevent malevolent leaders, see Reducing risks from malevolent actors.)

Natural s-risks

The categories of incidental and agential s-risks do not capture all possible scenarios. Suffering could also occur “naturally” without any powerful agents being involved. Consider, for instance, the suffering of animals living in nature. Wild animals are rarely at the forefront of our minds, but they actually constitute the vast majority of sentient beings on Earth. While many people tend to view nature as idyllic, the reality is that animals living in nature are subject to hunger, injuries, conflicts, painful diseases, predation, and other serious harms.

I will use the term natural s-risks to refer to the possibility that such "natural" suffering takes place (or will take place in the future) on an astronomical scale.[2] For instance, it would be a natural s-risk if wild animal suffering were common on many planets, or if it eventually spread throughout the cosmos rather than remaining limited to Earth. (If human civilisation were to spread wild animal suffering throughout the universe, e.g. as part of the process of terraforming other planets, that would count as an incidental s-risk.)

I have so far bracketed the question of how likely or severe the different types of s-risks are. This is partly because the distribution of expected future suffering is an open question that is subject to great uncertainty. Nevertheless, natural s-risks generally seem less worrisome than the other categories. The available evidence — such as the non-observation of any alien lifeforms — does not suggest that wild animal suffering (or anything comparable) is common in the universe. And there is also not much reason, as far as I can tell, to think that a new source of natural suffering (of astronomical proportions) will come into existence in the future.

Other classifications

The classification I have presented so far is based on the mechanisms through which astronomical future suffering might come about. Of course, this is not the only way to classify s-risks.

Another helpful distinction is between known and unknown s-risks. A known s-risk is a scenario that we can already conceive of at this point. Yet our imagination is often limited. Unknown s-risks may emerge in scenarios that we never thought of or cannot even comprehend (akin to the atomic bomb from a medieval perspective). It is possible that unanticipated mechanisms will lead to large amounts of incidental suffering ("unknown incidental s-risks"), that future agents will have unanticipated reasons to deliberately cause harm ("unknown agential s-risks"), or that new insights will reveal that, despite appearances, our universe contains astronomical amounts of natural suffering ("unknown natural s-risks").

We can also distinguish s-risks by the type of sentient beings who are affected. There are s-risks that affect humans, s-risks that affect nonhuman animals, and s-risks that affect artificial minds. The examples I have discussed focus primarily on the latter two categories, because s-risks affecting nonhumans are likely to be more neglected. This does not mean that s-risks that mainly affect humans are unimportant.

Finally, it can be useful to distinguish between influenceable and non-influenceable s-risks. An s-risk is influenceable if it is possible in principle to do something to prevent it, even if this may be (for whatever reason) difficult in practice. For obvious reasons, we should focus on influenceable s-risks, and all examples I discussed so far are influenceable. Non-influenceable astronomical suffering, e.g. in a non-reachable part of our universe, is lamentable but not worth our attention or effort.[3]

Further research

Based on this typology, I recommend that further work consider the following questions:

- Which types of s-risk are most important in terms of the amount of expected suffering?

- Which types of s-risk are particularly tractable or neglected?

- What are the most significant risk factors that make different types of s-risks more likely, or more serious in scale if they occur?

- What are the most effective interventions for each respective type of s-risk? Are there interventions that address many different types of s-risk at the same time?

1. Animal agriculture does not qualify as an example of a (realised) s-risk because an s-risk requires astronomical scope (by definition). That is, the amount of suffering would need to exceed current suffering by several orders of magnitude for something to constitute an s-risk.

2. If such natural suffering is already taking place now, rather than coming into existence in the future, then it is perhaps inaccurate to call this a "risk". But for simplicity, I will still use the s-risk terminology to refer to the "risk" that we won't do what we could do to reduce such suffering.

3. As a result of the expansion of the universe, distant objects recede from an observer, and the laws of physics therefore impose a limit on the reachable amount of space. It is worth noting, though, that some decision theories suggest that we may still have an effect on causally disconnected regions if there are correlations between the relevant decision-making processes.